Sawback Pick and Place

ROS | SLAM | Autonomous Navigation | C++ | MoveIt | Robotic Manipulation

Description

This project is a proof of concept showing that a robot can, without any human intervention, navigate a room, detect objects that are not where they should be, and return them to their original position. The robot navigates the lab space autonomously. It also has a patrol mode: when this mode is activated, the robot goes back and forth in a certain area. If an item is detected, the robot picks it up and, based on which object was detected, autonomously navigates to the drawer, box container, or shelf area and places the object where it belongs.

Demonstration of Sawback opening a drawer

Overview

The hardest part of this project was integrating and fusing all the components (robots and sensors). The robot, named Sawback, is made of two different robots: Ridgeback, an omnidirectional mobile platform, and the Sawyer arm mounted on top of it, hence the name Sawback. Each robot has its own computer, and with the developer's computer that makes three computers in total, each of which has to execute specific instructions and communicate with the others. Another problem to overcome was the number of packages: almost 80 in total, split across different workspaces. Extended workspaces had to be created to link the whole project together, and machine tags were used to specify what is executed where.

Autonomous Navigation

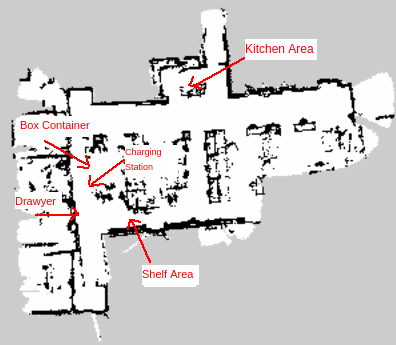

As the first step in this project, I created a map of the room I was working in (Figure 1), which was my university's lab. To create the map, a Velodyne lidar sensor was used together with the RTAB-Map library to perform SLAM and convert the 3D point cloud into a 2D map.

Fig. 1 Map Creation
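To give an intuition for how a 3D point cloud can become a 2D map, here is a minimal sketch that marks a grid cell as occupied whenever a point falls inside a height band. This is a simplified illustration, not RTAB-Map's actual algorithm; the names `Point3`, `Grid2D`, and `projectCloud`, and the height band parameters, are all assumptions made for the example.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Point3 {
  double x, y, z;  // meters
};

// Square 2D grid centered on the origin; each cell is 0 (not occupied) or 1 (occupied).
struct Grid2D {
  std::size_t size;        // cells per side
  double resolution;       // meters per cell
  std::vector<int> cells;  // row-major, size * size entries

  Grid2D(std::size_t n, double res) : size(n), resolution(res), cells(n * n, 0) {}

  int at(std::size_t row, std::size_t col) const { return cells[row * size + col]; }
};

// Mark a cell occupied when a point falls inside the height band [zMin, zMax].
// Points outside the band (floor, ceiling) or outside the grid are ignored.
void projectCloud(const std::vector<Point3>& cloud, double zMin, double zMax,
                  Grid2D& grid) {
  const double half = 0.5 * grid.size * grid.resolution;  // half of the grid width in meters
  for (const Point3& p : cloud) {
    if (p.z < zMin || p.z > zMax) continue;
    const double col = std::floor((p.x + half) / grid.resolution);
    const double row = std::floor((p.y + half) / grid.resolution);
    if (col < 0 || row < 0 || col >= grid.size || row >= grid.size) continue;
    grid.cells[static_cast<std::size_t>(row) * grid.size +
               static_cast<std::size_t>(col)] = 1;
  }
}
```

A real SLAM pipeline also estimates the sensor pose and traces free space along each ray; the sketch only covers the flattening step.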

After the map was created (Figure 2), specific points were saved. The MoveBase action is used to navigate to them, while the front and rear Hokuyo sensors and the Velodyne lidar help the robot avoid collisions. The saved points are:

- Kitchen Area

- Charging Station

- Shelves Area

- Box container Area

- Drawer Area

Fig. 2 Lab Map
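The saved points can be thought of as a table of named poses in the map frame. Below is a minimal sketch of such a table, plus the yaw-to-quaternion conversion a planar navigation goal typically needs. The key names and all coordinates are placeholders invented for the example, not the values recorded in the lab, and `Waypoint`, `findWaypoint`, and `yawToQuaternion` are hypothetical helpers.

```cpp
#include <cmath>
#include <map>
#include <string>

// A 2D navigation goal in the map frame: position in meters, heading in radians.
struct Waypoint {
  double x, y, yaw;
};

struct Quaternion {
  double x, y, z, w;
};

// Rotation about the vertical axis only, which is all a planar base needs.
Quaternion yawToQuaternion(double yaw) {
  return {0.0, 0.0, std::sin(yaw / 2.0), std::cos(yaw / 2.0)};
}

// Hypothetical table of saved points; the coordinates are placeholders.
const std::map<std::string, Waypoint>& savedWaypoints() {
  static const std::map<std::string, Waypoint> table = {
      {"kitchen_area", {1.0, 4.0, 0.0}},
      {"charging_station", {0.0, 0.0, 0.0}},
      {"shelves_area", {3.5, 1.0, 1.57}},
      {"box_container_area", {3.5, -1.0, -1.57}},
      {"drawer_area", {5.0, 0.5, 3.14}},
  };
  return table;
}

// Copies the named waypoint into `out`; returns false if the name is unknown.
bool findWaypoint(const std::string& name, Waypoint& out) {
  const std::map<std::string, Waypoint>& table = savedWaypoints();
  const std::map<std::string, Waypoint>::const_iterator it = table.find(name);
  if (it == table.end()) return false;
  out = it->second;
  return true;
}
```

In a ROS node, the looked-up pose would be copied into the goal message sent to the MoveBase action server.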

Patrol

The project has a special mode that is activated with a service. When patrol mode (Figure 3) is on, the robot starts from its original position, goes forward about 5 meters, and returns to the starting position, again and again. If an object is detected, the robot stops and the robotic arm manipulation starts.

Fig. 3 Patrol Mode
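The patrol behavior can be sketched as a small state machine. This is an illustration of the logic described above, not the project's actual node: the `Patrol` class, its state names, and its callbacks are assumptions, and the ROS service and action plumbing is left out.

```cpp
enum class PatrolState { Idle, Outbound, Returning, Manipulating };

class Patrol {
 public:
  // legLength: how far the robot drives before turning back, in meters.
  explicit Patrol(double legLength) : legLength_(legLength) {}

  // Would be called from the service that turns patrol mode on or off.
  void activate() {
    if (state_ == PatrolState::Idle) state_ = PatrolState::Outbound;
  }
  void deactivate() { state_ = PatrolState::Idle; }

  // Would be called when the base reports that the current goal was reached.
  void onGoalReached() {
    if (state_ == PatrolState::Outbound) {
      state_ = PatrolState::Returning;
    } else if (state_ == PatrolState::Returning) {
      state_ = PatrolState::Outbound;
    }
  }

  // Would be called when an object is detected; patrol stops and the arm takes over.
  void onObjectDetected() {
    if (state_ == PatrolState::Outbound || state_ == PatrolState::Returning) {
      state_ = PatrolState::Manipulating;
    }
  }

  // Current goal, expressed as distance from the start along the patrol line.
  double goalDistance() const {
    return state_ == PatrolState::Outbound ? legLength_ : 0.0;
  }

  PatrolState state() const { return state_; }

 private:
  double legLength_;
  PatrolState state_ = PatrolState::Idle;
};
```

With `Patrol patrol(5.0)`, each reached goal flips the target between 5 m and the start until a detection interrupts the loop.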

Robotic Arm Manipulation

To pick the objects, I wanted to use some sort of color detection together with the GPD (Grasp Pose Detection) library, but due to limited time I placed an AprilTag on each object instead. When an AprilTag is detected, the robot aligns itself with the tag and stops at a set distance from it. MoveIt CPP was used to manipulate the arm and grasp the object. Figure 4 shows some examples of grabbing a dice cube.

Fig. 4 Pick Item
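One way to express "align with the tag and stop at a set distance" is to compute a base goal on the line between the robot and the tag. The sketch below assumes planar positions in a common frame and is only an illustration; `Pose2D`, `standoffGoal`, and the standoff parameter are hypothetical names, not taken from the project code.

```cpp
#include <cmath>

struct Pose2D {
  double x, y, yaw;  // meters, meters, radians
};

// Computes a goal that faces the tag and sits `standoff` meters short of it,
// on the straight line from the robot to the tag.
// Returns false when the tag is (almost) at the robot position, since the
// direction is undefined there, or when the standoff is negative.
bool standoffGoal(double robotX, double robotY, double tagX, double tagY,
                  double standoff, Pose2D& goal) {
  const double dx = tagX - robotX;
  const double dy = tagY - robotY;
  const double dist = std::hypot(dx, dy);
  if (dist < 1e-6 || standoff < 0.0) return false;
  goal.yaw = std::atan2(dy, dx);         // heading that points at the tag
  goal.x = tagX - standoff * dx / dist;  // step back from the tag along the line
  goal.y = tagY - standoff * dy / dist;
  return true;
}
```

For a robot at the origin and a tag 2 m straight ahead, a 0.5 m standoff gives a goal 1.5 m ahead with zero heading. Because Ridgeback is omnidirectional, the base can correct lateral and heading error at the same time.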

Once the arm has picked up the object, the robot navigates to the proper location for that object and places it in a specific container: a box on top of a table, shelves, or a drawer. Opening the drawer requires extra precision, so an additional AprilTag was used to determine the drawer's exact position. For AprilTag detection, a Bumblebee camera facing the floor was used.
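Choosing the destination from the detected object can be sketched as a lookup on the AprilTag ID. The ID assignments below are invented for illustration, as are the names `Destination`, `destinationForTag`, and `needsExtraTag`.

```cpp
enum class Destination { Box, Shelves, Drawer, Unknown };

// Hypothetical mapping from the AprilTag ID on an object to where it belongs.
// The IDs 0, 1, and 2 are placeholders, not the tags used in the lab.
Destination destinationForTag(int tagId) {
  switch (tagId) {
    case 0:
      return Destination::Box;
    case 1:
      return Destination::Shelves;
    case 2:
      return Destination::Drawer;
    default:
      return Destination::Unknown;
  }
}

// Only the drawer relies on a second tag to localize the container precisely.
bool needsExtraTag(Destination destination) {
  return destination == Destination::Drawer;
}
```

An unknown ID maps to `Destination::Unknown`, so the caller can leave the object alone rather than guess.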

Results

The demonstrations below show the project running in different scenarios.

Demonstration of Sawback placing an item on a shelf

Fig Sawback Shelf Manipulation

Demonstration of Sawback placing an item in a box

Fig Sawback Box Container Manipulation

Demonstration of Sawback opening a drawer. The robot follows these steps:

- Grab the object and place it on top of the drawer.

- Place the gripper inside the handle.

- Move forward and open the drawer.

- Go back and then forward to get re-aligned.

- Grab the object from the top of the drawer.

- Place the object inside the drawer.

- Move forward, set the arm position, and then move backward to close the drawer.

Fig Sawback Drawer Manipulation